Explore and download the latest open-source tools for data analysis and teaching resources developed as part of PDEL's work.

The widespread expansion of mobile phones into even remote parts of the developing world has opened up a flexible and cost-effective conduit through which researchers can interact with previously marginalized populations. Building on the success of mobile-based research projects run from UC San Diego, we have built a customizable open-source software platform that fundamentally changes researchers' ability to gather data from, and disseminate information to, low-income households worldwide. The platform supports the creation of SMS data loops that enable new forms of sophisticated mobile phone-based information interventions and research. It gives researchers and graduate students the last-mile capability to build systems that solicit information from, and provide information directly to, the “bottom billion.”

Our SMS messaging platform addresses a fundamental barrier to reaching remote populations in developing countries: cost. A typical study in rural Africa might cost $100 per survey to conduct, with much of that money eaten up in fuel and vehicle rentals. An SMS message typically costs 5 cents or less to send (and nothing to receive) within a developing country, and the mobile network is the most ubiquitous and robust infrastructure to have been deployed in rural Africa and South Asia.
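As a rough illustration of the cost gap, assume a hypothetical 10-question survey sent as one outgoing SMS per question at the 5-cent upper bound above, and count only the researcher's outgoing messages (server, hardware, and staff costs are ignored):

$$
10 \times 0.05 = 0.50 \ \text{USD per respondent}, \qquad \frac{100}{0.50} = 200
$$

Under these assumptions, reaching one respondent by SMS costs about 1/200th of the in-person figure.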

There are currently many services that allow a large number of text messages to be sent relatively cheaply almost anywhere on earth, but these systems are not typically built to handle responses: the information flow is one way. In our SMS messaging platform, incoming and outgoing messages are handled by an in-country server, so that very low-cost communication is enabled for both researchers and respondents.
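The two-way flow is what makes an SMS data loop possible: the server sends a question, receives the reply, validates it, and sends the next question. Below is a minimal sketch of that server-side logic, assuming plain-text multiple-choice questions. `SurveyLoop` and `Question` are hypothetical names, not the SMSSurvey API, and the actual transport (the phones or modems) is left out.

```python
from dataclasses import dataclass, field


@dataclass
class Question:
    prompt: str
    choices: tuple[str, ...]  # accepted replies, compared case-insensitively


@dataclass
class SurveyLoop:
    questions: list[Question]
    progress: dict[str, int] = field(default_factory=dict)       # sender -> next question index
    answers: dict[str, list[str]] = field(default_factory=dict)  # sender -> recorded replies

    def start(self, sender: str) -> str:
        """Enroll a respondent and return the first outgoing message."""
        self.progress[sender] = 0
        self.answers[sender] = []
        return self.questions[0].prompt

    def handle_reply(self, sender: str, text: str):
        """Process one incoming SMS and return the reply to send (None = send nothing)."""
        i = self.progress.get(sender)
        if i is None or i >= len(self.questions):
            return None  # unknown sender or survey already finished
        q = self.questions[i]
        reply = text.strip().upper()
        if reply not in (c.upper() for c in q.choices):
            return "Please reply with one of: " + ", ".join(q.choices)  # re-prompt
        self.answers[sender].append(reply)
        self.progress[sender] = i + 1
        if i + 1 < len(self.questions):
            return self.questions[i + 1].prompt
        return "Thank you for completing the survey."
```

Keeping per-sender state on the server means respondents only ever send short answers, which keeps each reply to a single cheap message.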

The software has been field tested in Uganda.

The system has the following features:

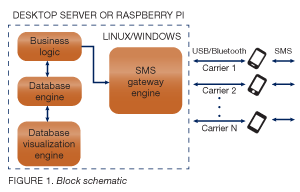

Our core architecture uses AT commands to communicate with cell phones or GSM modems that send and receive text messages. The setup consists of servers or Raspberry Pis connected to cell phones via Bluetooth or USB. A single server can support up to 64 cell phones. Each phone holds a SIM card, which can belong to any carrier. Fig 1 shows the block schematic.
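A minimal sketch of the AT-command exchange, assuming a modem that supports the standard text-mode SMS commands of 3GPP TS 27.005 (`AT+CMGF`, `AT+CMGS`, `AT+CMGL`). The helper names are hypothetical and serial I/O is omitted: a real driver must wait for `OK` and for the `> ` prompt between writes. The phone number and timestamp are placeholders, and message bodies are assumed to be plain ASCII on a single line.

```python
import csv

CTRL_Z = b"\x1a"  # terminates the message body after AT+CMGS in text mode


def send_sms_commands(number: str, text: str) -> list[bytes]:
    """Byte strings to write, in order, to send one SMS in text mode."""
    return [
        b"AT+CMGF=1\r",                           # switch the modem to text mode
        f'AT+CMGS="{number}"\r'.encode("ascii"),  # destination; modem answers with "> "
        text.encode("ascii") + CTRL_Z,            # body, terminated by Ctrl-Z
    ]


def parse_cmgl(response: str) -> list[dict]:
    """Parse the reply to AT+CMGL="REC UNREAD" (text mode) into message dicts."""
    lines = [ln.strip() for ln in response.splitlines() if ln.strip()]
    messages, i = [], 0
    while i < len(lines):
        if lines[i].startswith("+CMGL:"):
            # csv handles the quoted timestamp, which itself contains a comma
            fields = next(csv.reader([lines[i][len("+CMGL:"):].strip()]))
            body = lines[i + 1] if i + 1 < len(lines) else ""
            messages.append({"index": int(fields[0]), "status": fields[1],
                             "sender": fields[2], "text": body})
            i += 2
        else:
            i += 1  # skip OK and other status lines
    return messages


# Usage with a canned modem response.
resp = '+CMGL: 1,"REC UNREAD","+256700000000",,"21/03/01,10:15:00+12"\r\nYes\r\n\r\nOK\r\n'
print(send_sms_commands("+256700000000", "Did it rain today?"))
print(parse_cmgl(resp))
```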

The SMS gateway engine is responsible for sending and receiving SMS via the cell phones. The duties of the engine include:

SMSSurvey is open-source and is now accessible to the research community through GitHub.

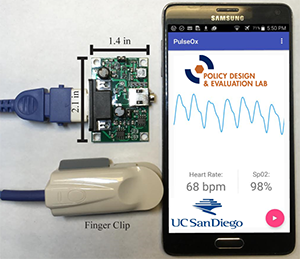

Researchers designed a smartphone-based pulse oximeter that interfaces with the phone through the audio jack, enabling point-of-care measurements of heart rate and oxygen saturation.
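A minimal sketch of how heart rate and oxygen saturation are commonly derived from pulse-oximeter signals. It assumes the device yields separate red and infrared photoplethysmogram (PPG) sample streams, as two-wavelength oximeters generally do; the source does not describe the app's own signal processing. It uses the standard ratio-of-ratios method with a commonly quoted, uncalibrated linear mapping SpO2 ≈ 110 − 25R. Real devices rely on empirical calibration, and real PPG signals need filtering first. Function names are hypothetical.

```python
import math


def ratio_of_ratios(red, ir):
    """R = (AC_red / DC_red) / (AC_ir / DC_ir) over one window of PPG samples."""
    def ac_dc(x):
        # AC: peak-to-peak pulsatile swing; DC: mean baseline level
        return max(x) - min(x), sum(x) / len(x)
    ac_r, dc_r = ac_dc(red)
    ac_i, dc_i = ac_dc(ir)
    return (ac_r / dc_r) / (ac_i / dc_i)


def spo2_estimate(r):
    # Commonly quoted linear approximation, NOT calibrated for any real device.
    return 110.0 - 25.0 * r


def heart_rate_bpm(signal, fs):
    """Heart rate from the mean spacing of local maxima that lie above the signal mean."""
    mean = sum(signal) / len(signal)
    peaks = [i for i in range(1, len(signal) - 1)
             if signal[i] > mean and signal[i] >= signal[i - 1] and signal[i] > signal[i + 1]]
    if len(peaks) < 2:
        raise ValueError("need at least two beats in the window")
    mean_gap = (peaks[-1] - peaks[0]) / (len(peaks) - 1)  # samples per beat
    return 60.0 * fs / mean_gap


# Usage with synthetic 72 bpm (1.2 Hz) signals sampled at 100 Hz for 10 s.
fs = 100
t = [n / fs for n in range(10 * fs)]
red = [1.0 + 0.01 * math.sin(2 * math.pi * 1.2 * x) for x in t]
ir = [1.0 + 0.02 * math.sin(2 * math.pi * 1.2 * x) for x in t]
r = ratio_of_ratios(red, ir)
print(round(r, 3), round(spo2_estimate(r), 1), round(heart_rate_bpm(ir, fs)))
```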

The device uses existing phone resources (such as the processor, battery, and memory), resulting in a more portable and less expensive diagnostic tool than standalone equivalents. Because it adaptively tunes the LED driving signal, the device depends less on phone-specific audio jack properties than prior audio jack-based work, making it compatible with all smartphones.
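The idea behind adaptive tuning can be illustrated with a simple feedback loop: raise or lower the LED drive until the detected signal reaches a target amplitude, whatever the phone's audio output gain happens to be. This is a sketch under stated assumptions, not the authors' algorithm; `tune_led_drive`, its parameters, and the simulated phone channel are hypothetical.

```python
from typing import Callable


def tune_led_drive(measure: Callable[[float], float], target: float = 0.5,
                   drive: float = 0.1, max_drive: float = 1.0,
                   tol: float = 0.02, max_iter: int = 20) -> float:
    """Return a drive amplitude whose measured detector amplitude is within tol of target."""
    for _ in range(max_iter):
        measured = measure(drive)
        if abs(measured - target) <= tol:
            return drive
        if measured <= 0:
            drive = min(max_drive, drive * 2)  # no signal yet: ramp up
        else:
            # Damped multiplicative step: for a linear channel the square root
            # halves the log-error on each iteration, avoiding overshoot.
            drive = min(max_drive, drive * (target / measured) ** 0.5)
    raise RuntimeError("target amplitude not reached; phone output may be too weak")


def simulated_phone(gain: float) -> Callable[[float], float]:
    # Hypothetical stand-in for a phone with unknown audio output gain; clips at 1.0.
    return lambda drive: min(1.0, gain * drive)


# Usage: the same loop settles on different drive levels for different phones.
for gain in (0.8, 2.0, 5.0):
    print(gain, round(tune_led_drive(simulated_phone(gain)), 3))
```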

The research team has developed Android and iOS versions of the app. The source code is publicly available on GitHub.

A teaching module developed by the Policy Design and Evaluation Lab on research transparency and replicability is now available on GitHub. The goals of this module are to: 1) demonstrate the existence of a credibility crisis in the social sciences, caused by a variety of incentives and practices at both the disciplinary and individual levels; and 2) provide practical steps for researchers to improve the credibility of their work throughout the lifecycle of a project.

The module is intended for use in graduate-level social science methodology courses—including those in political science, economics, sociology, and psychology. It covers the main causes of the credibility crisis in social science, as well as practical solutions for individual researchers to address these issues in their own work.

After completing the module, students will understand the importance of transparency for the scientific enterprise. They will recognize the institutional and incentive challenges to replicable research and they will be empowered with appropriate tools to adopt replicable practices.

The materials have been tested in classrooms at UC San Diego. They can be used as a stand-alone workshop or inserted into an existing course on social science methods or research design.

Funding for this project was generously provided by the Berkeley Initiative for Transparency in the Social Sciences (BITSS) through a Catalyst Grant.

The materials have two parts. Part I reviews the causes of the credibility and replication crisis and the solutions to it. Part II provides an introduction to critical tools for addressing replicability, especially GitHub.

Access the materials on GitHub

These materials are licensed under Creative Commons BY-NC 4.0. You are free to share and adapt them for any non-commercial purpose with proper attribution. Please cite as follows:

Clark, J., Desposato, S., and McIntosh, C. 2017. "How to Improve the Credibility of (your) Social Science: A Practical Guide for Researchers." Policy Design and Evaluation Lab (PDEL). University of California, San Diego.

The possibility of interference between individuals has traditionally been seen as the Achilles' heel of randomized experiments, because contamination of the control group by spillover effects produces internally invalid impact estimates. Research designs and randomized controlled trials that fail to account for spillovers can produce biased estimates of intention-to-treat effects, while finding meaningful treatment effects but failing to observe deleterious spillovers can lead to misconstrued policy conclusions. In many contexts, a full understanding of the policy environment requires measuring spillover and threshold effects that are not captured by (or, worse, are sources of bias in) standard experimental designs.

This software allows a researcher to explore the statistical power of experiments to identify estimands of treatment and spillover effects when there is interference between units. We focus on settings with partial interference, in which individuals are split into mutually exclusive clusters, such as villages or schools, and interference occurs between individuals within a cluster but not across clusters. We consider experiments in which treatment is allocated using a randomized saturation (RS) design, a two-stage randomization procedure: first, the share of individuals assigned to treatment within each cluster (the cluster's saturation) is randomized; second, individuals within each cluster are randomly assigned to treatment according to the saturation realized in the first stage. RS designs can identify a rich set of estimands, including the treatment effect and the spillover effect on untreated individuals at specific saturations, slope effects measuring how spillover effects change with treatment saturation, and pooled effects across multiple saturations.
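The two-stage procedure can be sketched as follows, assuming a fixed menu of saturations with a target share of clusters at each, and complete randomization at both stages. The function name and arguments are hypothetical and this is not the PDEL software's code; within each cluster it treats exactly round(saturation × cluster size) individuals, which is one of several reasonable conventions.

```python
import bisect
import itertools
import random
from collections import Counter


def randomized_saturation(clusters, saturations, shares, seed=None):
    """Two-stage randomized saturation (RS) assignment.

    clusters:    dict mapping cluster id -> list of individual ids
    saturations: candidate within-cluster treatment shares, e.g. [0.0, 0.5, 1.0]
    shares:      fraction of clusters assigned to each saturation (should sum to 1)
    Returns (cluster_saturation, treated), where treated maps individual id -> bool.
    """
    rng = random.Random(seed)
    ids = list(clusters)
    rng.shuffle(ids)

    # Stage 1: after shuffling, place cluster j at the evenly spaced point (j + 0.5) / n
    # and give it the saturation whose cumulative-share bin contains that point.
    cum = list(itertools.accumulate(shares))
    cluster_sat = {}
    for j, cid in enumerate(ids):
        u = (j + 0.5) / len(ids)
        k = min(bisect.bisect_right(cum, u), len(saturations) - 1)
        cluster_sat[cid] = saturations[k]

    # Stage 2: within each cluster, randomly treat round(saturation * size) individuals.
    treated = {}
    for cid, members in clusters.items():
        chosen = set(rng.sample(members, round(cluster_sat[cid] * len(members))))
        for m in members:
            treated[m] = m in chosen
    return cluster_sat, treated


# Usage: 30 villages of 20 households, split evenly across pure control, 50%, and 100% saturation.
villages = {v: [f"v{v}-h{h}" for h in range(20)] for v in range(30)}
sat, treated = randomized_saturation(villages, [0.0, 0.5, 1.0], [1/3, 1/3, 1/3], seed=7)
print(Counter(sat.values()))
```

Including a 0.0 saturation yields pure control clusters, which serve as the comparison group for both treatment and spillover estimands.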

For a given RS design, our software calculates the minimum detectable effect (MDE) of these estimands: the smallest true value of an estimand that the design can distinguish from zero at a chosen significance level and statistical power. The software also calculates the optimal RS design for different researcher objectives. Given a set of estimands and a set of weights specified by the researcher, it finds the RS design that minimizes the weighted sum of the MDEs for those estimands. Our paper establishes that introducing variation in the treatment saturation across clusters affects the power available for different estimands. These optimal design calculations allow the researcher to characterize this power trade-off precisely.
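The MDE and weighted-objective idea can be illustrated on a deliberately simplified design: a share `pi0` of clusters is pure control and the rest are treated at a single saturation `s`. The sketch assumes equal cluster sizes, a random-effects outcome model with intra-cluster correlation `icc`, unit outcome variance (so MDEs are in standard-deviation units), and a normal approximation, MDE = (z₁₋α/₂ + z_power) × SE. It is not the formula set from the paper or the PDEL software, and all names are hypothetical; it only shows the trade-off: raising `s` shrinks the MDE of the treatment effect T(s) but inflates the MDE of the spillover effect S(s).

```python
from statistics import NormalDist


def mde(se, alpha=0.05, power=0.8):
    """Minimum detectable effect of a two-sided test: (z_{1-alpha/2} + z_{power}) * SE."""
    z = NormalDist().inv_cdf
    return (z(1 - alpha / 2) + z(power)) * se


def mean_var(n_clusters, per_cluster, icc, sigma2=1.0):
    """Variance of a group mean pooled over n_clusters equal-size clusters
    (per_cluster individuals each) under a random-effects model with ICC icc."""
    return sigma2 * (icc + (1 - icc) / per_cluster) / n_clusters


def toy_mdes(pi0, s, C, n, icc):
    """MDEs for T(s) (treated vs pure control) and S(s) (untreated vs pure control)
    when a share pi0 of C clusters is pure control and the rest are at saturation s."""
    v_ctrl = mean_var(pi0 * C, n, icc)
    se_T = (mean_var((1 - pi0) * C, s * n, icc) + v_ctrl) ** 0.5
    se_S = (mean_var((1 - pi0) * C, (1 - s) * n, icc) + v_ctrl) ** 0.5
    return mde(se_T), mde(se_S)


def optimal_toy_design(C, n, icc, w_T=0.5, w_S=0.5, grid=19):
    """Grid search over (pi0, s) in (0, 1)^2 minimizing w_T * MDE_T + w_S * MDE_S."""
    best = None
    for i in range(1, grid + 1):
        for j in range(1, grid + 1):
            pi0, s = i / (grid + 1), j / (grid + 1)
            m_T, m_S = toy_mdes(pi0, s, C, n, icc)
            loss = w_T * m_T + w_S * m_S
            if best is None or loss < best[0]:
                best = (loss, pi0, s)
    return best  # (weighted MDE sum, pi0, s)


# Usage: 100 clusters of 20, ICC 0.05; weighting T(s) heavily pushes the optimal s above 0.5.
print(optimal_toy_design(100, 20, 0.05))
print(optimal_toy_design(100, 20, 0.05, w_T=0.9, w_S=0.1))
```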

We have provided a GUI for ease of use. The video provides a tutorial on how to use it. We have also supplied Python, R, and MATLAB code.

A. Bohren, P. Staples, S. Baird, C. McIntosh, and B. Özler, (2016). Power Calculation Software for Randomized Saturation Experiments, Version 1.0. Available from http://pdel.ucsd.edu/tools/index.html