Reverse-Engineering Censorship in China

Researchers: Molly Roberts (UC San Diego), Gary King (Harvard University), and Jennifer Pan (Harvard University)

Location: United States and China

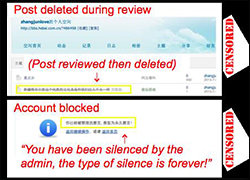

Existing research on the extensive Chinese censorship organization relies on observational methods with well-known limitations. Researchers in this project conducted the first large-scale experimental study of censorship: they created accounts on numerous social media sites, submitted randomly assigned texts, and observed which texts were censored and which were not.

Assistants in China and the United States opened 200 user accounts at 100 sites and then authored 1,200 unique posts, randomizing the assignment of different types of posts to accounts. Some commented on events involving collective action, such as volatile demonstrations over government land grabs in Fujian province. Others responded to events involving no collective action, like a corruption investigation of a provincial vice governor. For each event, the assistants authored both pro- and anti-government posts.
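The random assignment described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the researchers' actual procedure: the function `assign_posts`, the condition labels, the even split across the four conditions, and the account names are all hypothetical.

```python
import random
from itertools import product


def assign_posts(accounts, n_posts, seed=0):
    """Randomly assign posts from a 2x2 design to accounts (hypothetical sketch)."""
    rng = random.Random(seed)
    # Two assumed factors: event type and stance toward the government.
    conditions = list(product(
        ("collective_action", "no_collective_action"),
        ("pro_government", "anti_government"),
    ))
    # Repeat the four conditions as evenly as possible, then shuffle the order.
    pool = [conditions[i % len(conditions)] for i in range(n_posts)]
    rng.shuffle(pool)
    # Pair each post with an account chosen uniformly at random.
    return [
        {"post_id": i, "account": rng.choice(accounts),
         "event_type": event_type, "stance": stance}
        for i, (event_type, stance) in enumerate(pool)
    ]


# Placeholder account names; the counts mirror the 200 accounts and 1,200 posts above.
accounts = [f"account_{k}" for k in range(200)]
plan = assign_posts(accounts, 1200)
```

Because the condition attached to each post is decided by a shuffle rather than by the author or the account, later differences in censorship can be attributed to the content of the post rather than to who posted it.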

The study also sought to produce more reliable descriptive knowledge of how the censorship process works. The researchers created their own social media site within China and contracted with Chinese firms to install the same censoring technologies used by existing sites. With access to the firms' software, documentation, and even customer support, they were able to reverse-engineer how the process works.

The results offer rigorous support for the recent hypothesis that criticisms of the state, its leaders, and their policies are published, whereas posts about real-world events with collective action potential are censored. Criticisms of the state are quite useful to the government for gauging public sentiment, whereas the spread of collective action is potentially very damaging.

Posts created by the team related to collective action events were between 20 and 40 percent more likely to be censored than were posts related to other topics. Posts critical of the government, on the other hand, were not significantly more likely to be censored than supportive posts, even when they called out leaders by name.
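A comparison like the one above amounts to a difference in censorship rates between two groups of posts. The sketch below shows one common way to compute such a difference together with a two-proportion z statistic. It is an assumption for illustration, not the analysis the researchers report, and the counts in the usage line are made up, not study data.

```python
import math


def censorship_rate_difference(censored_a, total_a, censored_b, total_b):
    """Return (rate_a - rate_b, z) for two groups of posts (illustrative only)."""
    rate_a = censored_a / total_a
    rate_b = censored_b / total_b
    # Pooled censorship rate under the null hypothesis of no difference.
    pooled = (censored_a + censored_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    # The z statistic is undefined when every post (or no post) was censored.
    z = (rate_a - rate_b) / se if se > 0 else float("nan")
    return rate_a - rate_b, z


# Made-up counts for demonstration only: group A = collective action posts,
# group B = posts on other topics.
diff, z = censorship_rate_difference(60, 100, 30, 100)
```

A large absolute z value suggests the gap between the two rates is unlikely to be due to chance alone, while a value near zero corresponds to the "not significantly more likely" pattern described for critical versus supportive posts.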